Part I

Types of Testing

# Two types of Testing

• White Box Testing

• Black Box Testing

# Black Box Testing

• Used to test the functionality of a module, program, or application with no knowledge of the product's internal design.

• Tests are primarily based on requirements and functionality.

# White Box testing

• Also called glass box, structural, clear box, or open box testing.

• The internal logic of the code is the focus of the tests.

• Tests are based on coverage of code statements, branches, and conditions.

Black Box testing

# Advantages

• Efficient when used on larger systems.

• The tester and developer work independently, so the testing is balanced and unprejudiced.

• The tester need not be technical.

• Tests are conducted from the end user's viewpoint.

• Identifies vagueness and contradictions in the functional specifications.

• Test cases can be designed as soon as the functional specifications are complete.

Black Box Testing

# Disadvantages

• Test cases are hard to design without clear functional specifications.

• Tricky inputs are difficult to identify if the test cases are not developed from the functional specifications.

• Testing time is limited, so it is difficult to identify all possible inputs.

• Some paths through the program may remain unidentified, and therefore untested.

White Box testing

# Advantages

• Testing is accurate, since the tester knows what the individual programs are supposed to do.

• Deviations of the program's behavior from its intended goals can be checked and verified accurately.

# Disadvantage

• Requires thorough knowledge of the programming code to examine the related inputs and outputs.

White Box Testing (Pre-requisites)

• The key to undertaking any white box testing is to understand the system architecture.

• The system architecture documents should form the basis for identifying systems and sub-systems and for generating test cases.

Identification of Test Items

• Test items should be identified from the specifications of the product or application.

Specifications required

• The specifications required for identifying test items are:

• Functions of the system (List of functions)

• Response criteria (bench marking and stress testing)

• Volume constraints (number of users, hits, stress testing)

• Database responses (flushing, cleaning, update rates)

• Network Criteria (network traffic, choking)

• Compatibility (environments, browsers)

• User Interface / Friendliness

• Modularity (ability to easily interface with other tools)

• Security

Criteria for Test Cases

# Each test case document/ item should have the following features

• It should be uniquely identifiable

• It should be unambiguous

• It should have well-defined test data or data patterns

• It should have well-defined PASS/FAIL criteria for each sub-item, and overall PASS/FAIL criteria for the entire test.

• It should be easy to record

• It should be easy to demonstrate repeatedly
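The criteria above can be captured in a small data structure. The following is a minimal sketch, assuming hypothetical names (`TestCaseSpec`, `SubItem`) that do not come from the slides; the overall verdict is assumed to be "every sub-item passes", which is one possible rule and not the only one.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical structure for illustration; names are not from the source.
final class TestCaseSpec {
    // One checkable sub-item with its own PASS/FAIL criterion.
    static final class SubItem {
        final String description;
        final String expected;
        final String actual;

        SubItem(String description, String expected, String actual) {
            this.description = description;
            this.expected = expected;
            this.actual = actual;
        }

        boolean passed() {
            return expected.equals(actual);
        }
    }

    private final String id;        // unique identifier
    private final String testData;  // well-defined test data or data pattern
    private final List<SubItem> subItems;

    TestCaseSpec(String id, String testData, List<SubItem> subItems) {
        this.id = id;
        this.testData = testData;
        this.subItems = new ArrayList<>(subItems);
    }

    // Assumed overall criterion: the test passes only if every sub-item passes.
    boolean passed() {
        for (SubItem item : subItems) {
            if (!item.passed()) {
                return false;
            }
        }
        return true;
    }

    // One-line result that is easy to record and to compare between runs.
    String report() {
        return id + " [" + testData + "]: " + (passed() ? "PASS" : "FAIL");
    }
}
```

Because the result depends only on the recorded data, the same test case can be demonstrated repeatedly and should give the same report each time.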

What does White Box testing contain?

• White box testing strives to attain code coverage.

• How can we obtain and achieve good code coverage?

Basis Path Testing

• It is a mechanism to derive a logical complexity measure of a procedural design and to use this measure as a guide for defining a basis set of execution paths.

• Test cases derived to exercise the basis set are guaranteed to execute every statement at least once.

Flow Graph Notation

• A notation for representing control flow similar to flow charts.

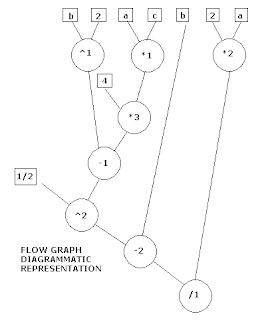

Example (Flow graph notation)

# E.g.

$$X = \frac{(b^2 - 4ac)^{1/2} - b}{2a}$$

can be represented using functional notation:

div(sub(pow(sub(pow(b,2),mul(4,mul(a,c))), 1/2),b), mul(2,a))
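The functional notation maps directly onto nested method calls. The sketch below is illustrative only: the class name `QuadraticRoot` and the helper methods are assumptions introduced here, and each helper corresponds to one operation node in the notation.

```java
// Illustrative sketch; class and method names are assumptions.
final class QuadraticRoot {
    static double sub(double x, double y) { return x - y; }
    static double mul(double x, double y) { return x * y; }
    static double div(double x, double y) { return x / y; }
    static double pow(double x, double y) { return Math.pow(x, y); }

    // X = ((b^2 - 4ac)^(1/2) - b) / (2a), written as the same nesting
    // of calls as the functional notation.
    // Note: a negative discriminant yields NaN, and a == 0 divides by zero.
    static double root(double a, double b, double c) {
        return div(
            sub(pow(sub(pow(b, 2), mul(4, mul(a, c))), 0.5), b),
            mul(2, a));
    }
}
```

Each call consumes the results of its arguments, so the evaluation order follows the data dependencies in the expression.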

The flow graph diagrammatic representation is given below.

Cyclomatic Complexity

• It gives a quantitative measure of the logical complexity.

• This value gives the number of independent paths in the basis set, and an upper bound for the number of tests needed to ensure that each statement is executed at least once.

• Cyclomatic complexity essentially represents the number of linearly independent paths through a particular section of code, which in object-oriented languages applies to methods.

• Cyclomatic complexity's formal equation from graph theory is as follows:

CC = E - N + 2P

where E represents the number of edges in the flow graph, N the number of nodes, and P the number of connected components (P = 1 for a single method).
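The graph-theoretic definition can be evaluated mechanically. The following is a minimal sketch that uses McCabe's standard form, E - N + 2P; the class name `CyclomaticComplexity` and the edge-list representation of the flow graph are assumptions made for illustration.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Illustrative sketch; the graph representation is an assumption.
final class CyclomaticComplexity {
    // edges[i] = {from, to}; nodes are numbered 0..nodeCount-1.
    static int compute(int nodeCount, int[][] edges) {
        int components = countComponents(nodeCount, edges);
        return edges.length - nodeCount + 2 * components;
    }

    // Counts connected components, treating edges as undirected.
    static int countComponents(int nodeCount, int[][] edges) {
        List<List<Integer>> adjacent = new ArrayList<>();
        for (int i = 0; i < nodeCount; i++) {
            adjacent.add(new ArrayList<>());
        }
        for (int[] edge : edges) {
            adjacent.get(edge[0]).add(edge[1]);
            adjacent.get(edge[1]).add(edge[0]);
        }
        boolean[] seen = new boolean[nodeCount];
        int components = 0;
        for (int start = 0; start < nodeCount; start++) {
            if (seen[start]) {
                continue;
            }
            components++;
            Deque<Integer> stack = new ArrayDeque<>();
            stack.push(start);
            seen[start] = true;
            while (!stack.isEmpty()) {
                int node = stack.pop();
                for (int next : adjacent.get(node)) {
                    if (!seen[next]) {
                        seen[next] = true;
                        stack.push(next);
                    }
                }
            }
        }
        return components;
    }
}
```

For a single if/else modelled as four nodes (condition, two branches, join) and four edges, `compute` returns 4 - 4 + 2 = 2; for straight-line code with two nodes and one edge it returns 1.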

    public int getValue(int param1)
    {
        int value = 0;
        if (param1 == 0)
        {
            value = 4;
        }
        else
        {
            value = 0;
        }
        return value;
    }

# Note that there is one decision point:

the if statement. The else is the other branch of the same decision, so it does not count separately. (This accounts for 1.)

In addition, the method's entry point adds one, so the final value equals 2.

Hence the Cyclomatic Complexity is 2

Cyclomatic Complexity (Ignored facts)

• As the cyclomatic complexity of a method is fundamentally the number of linearly independent paths contained in that method, a general rule of thumb states that, to ensure a high level of test coverage, the number of test cases for a method should equal the method's cyclomatic complexity. Unfortunately, this rule is often ignored.
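Applied to the getValue example, the rule of thumb means one test case per independent path. The sketch below is a hedged illustration: it copies the method into a hypothetical class `GetValueBasisPaths` as a static method and uses plain comparisons instead of a test framework.

```java
// Illustrative sketch; the class name and the test helper are assumptions.
final class GetValueBasisPaths {
    // Same logic as the getValue example, made static for easy calling.
    static int getValue(int param1) {
        int value = 0;
        if (param1 == 0) {
            value = 4;
        } else {
            value = 0;
        }
        return value;
    }

    // One check per independent path; returns the number of failed checks.
    static int runBasisPathTests() {
        int failures = 0;
        if (getValue(0) != 4) {
            failures++; // path through the if branch
        }
        if (getValue(7) != 0) {
            failures++; // path through the else branch
        }
        return failures;
    }
}
```

The two inputs together execute every statement and both outcomes of the decision.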

Control Structure Testing

# Can be achieved by

• Conditions Testing

• Data Flow Testing

• Loop Testing

Conditions Testing

• Condition Testing aims to exercise all logical conditions in a program.

• The Conditions to be tested may be

- Relational expressions (a operator b), where a and b are arithmetic expressions and the operator is a relational operator such as <, <=, ==, !=, > or >=

- Simple conditions (a Boolean variable or a relational expression)

- Compound conditions: two or more simple conditions joined by Boolean operators
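As an illustration of exercising a compound condition, the sketch below combines a Boolean variable with a relational expression and checks every true/false combination of the two. The method `canWithdraw` and its parameters are hypothetical and exist only for this example.

```java
// Illustrative sketch; canWithdraw is a hypothetical unit under test.
final class ConditionTesting {
    // Compound condition: a Boolean variable AND a relational expression.
    static boolean canWithdraw(boolean authenticated, int amount, int balance) {
        return authenticated && amount <= balance;
    }

    // Exercises every true/false combination of the two simple conditions,
    // plus the boundary of the relational expression.
    static boolean allCombinationsBehave() {
        return canWithdraw(true, 50, 100)       // true  && true
            && !canWithdraw(true, 150, 100)     // true  && false
            && !canWithdraw(false, 50, 100)     // false && true
            && !canWithdraw(false, 150, 100)    // false && false
            && canWithdraw(true, 100, 100);     // boundary: amount == balance
    }
}
```

Checking the boundary case separately helps catch a relational operator error such as `<` written in place of `<=`.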

Data Flow testing

• Data flow testing selects test paths according to the locations of the definitions and uses of variables.
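A small example can make definition-use pairs concrete. In the sketch below, the method `price` and its pricing rules are hypothetical; the comments mark where `discount` and `total` are defined and used, and the test inputs are chosen so that each definition reaches a use.

```java
// Illustrative sketch; price and its rules are assumptions for this example.
final class DataFlowExample {
    static int price(int quantity, boolean member) {
        int discount = 0;                          // definition d1 of discount
        if (member) {
            discount = 10;                         // definition d2 of discount
        }
        int total = quantity * 100;                // definition t1 of total
        if (quantity > 5) {
            total = total - discount * quantity;   // use of discount; definition t2 of total
        }
        return total;                              // use of total
    }

    // One input per definition-use pair; returns the number of failed checks.
    static int runDefUseTests() {
        int failures = 0;
        if (price(6, false) != 600) failures++;    // d1 reaches the use of discount
        if (price(6, true) != 540) failures++;     // d2 reaches the use of discount
        if (price(2, true) != 200) failures++;     // t1 reaches the return
        if (price(6, true) != 540) failures++;     // t2 reaches the return
        return failures;
    }
}
```

Note that `price(2, true)` defines `discount` at d2 but never uses it, which is the kind of anomaly data flow analysis is meant to surface.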

Loop Testing

• Loop structures are fundamental to many algorithms.

• Loops can be defined as Simple, Concatenated, Nested, and unstructured.
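For a simple loop, a commonly used heuristic (not stated in the slides, so treat it as an assumption) is to run the loop zero times, once, twice, a typical number of times, and at n-1, n, and n+1 passes, where n is the maximum. The sketch below applies this to a hypothetical method `sumFirst`.

```java
// Illustrative sketch; sumFirst is a hypothetical unit under test.
final class LoopTesting {
    // Sums the first `count` elements; count is clamped to 0..data.length,
    // so the loop body runs at most data.length times.
    static int sumFirst(int[] data, int count) {
        int limit = Math.min(Math.max(count, 0), data.length);
        int sum = 0;
        for (int i = 0; i < limit; i++) {
            sum += data[i];
        }
        return sum;
    }

    // Requested pass counts for n = 5: 0, 1, 2, typical (3), n-1, n, n+1.
    static int runSimpleLoopTests() {
        int[] data = {1, 2, 3, 4, 5};
        int[] counts = {0, 1, 2, 3, 4, 5, 6};
        int[] expected = {0, 1, 3, 6, 10, 15, 15};
        int failures = 0;
        for (int i = 0; i < counts.length; i++) {
            if (sumFirst(data, counts[i]) != expected[i]) {
                failures++;
            }
        }
        return failures;
    }
}
```

The n+1 case checks that a request beyond the maximum is handled safely instead of reading past the end of the array.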

Important tip for Loop testing

• Note that unstructured loops should not be tested as they stand.

• They need to be redesigned to use structured programming constructs, and then tested.

TEST AREAS

Unit Testing

• During the course of testing, the various constituents of the system are tested at increasing levels of scope, i.e. we test from a component (the smallest unit) up to the full system.

• A Component is the smallest unit for testing.

Component

• A Component is an independent, isolated and reusable unit of a program that performs a well-defined function.

• Most components have public interfaces that allow them to be used to perform their functions.

Module

• A module comprises one or more components that together achieve a business function.

• The module encapsulates the functionality of its components and appears as a “Black Box” to its users.

Sub-System

• Sub-Systems are defined as heterogeneous collections of modules to achieve a business function.

E.g. a banking application might interface with a signature reader, an accounting mechanism, and a database component to perform banking-related operations.

System

• The full system uses multiple subsystems to implement the full functionality of the Application.

Functional Test areas

• Security

• Network resources

• Capacity

• Scalability

• Reliability

• Burn-in

• Clustering

Security

• To address security issues, the following aspects are tested:

• Authentication (the authentication mechanism is tested based on class of user and password validation).

• If third-party products such as LDAP servers are used, those are tested as well.

• LDAP (Lightweight Directory Access Protocol) is an Internet protocol that email and other programs use to look up information from a directory server.

Data Security

• Flow of information as well as firewall access issues are tested for all aspects of the system under test.

• If data encryption is being used, it is tested for compatibility with standards.

Network Resources

• The factors to be tested for network resources are:

• Bandwidth

• Application dependency

Bandwidth

• System behavior is tracked by varying the bandwidth available on the network from 1 Kbps to 10 Mbps.

• Parameters such as timeouts and breakdowns of operations are monitored.

Applications dependency

• If the system under test depends on network applications such as SNMP (Simple Network Management Protocol), these dependencies are tested.

• They are tested for failover and recovery.

SNMP

• It is an application layer protocol that facilitates the exchange of management information between network devices.

• It is part of the Transmission Control Protocol/Internet Protocol (TCP/IP) protocol suite.

• SNMP enables network administrators to manage network performance, find and solve network problems, and plan for network growth.

Capacity

• Capacity testing looks at resource demands for each of the test vectors in various parts of the system.

• The test vectors are designed to reflect normal usage in terms of workflows and bandwidth requirements.

Capacity test

• Establishing the basis for a capacity test is a sore point for most organizations, because they lack test requirements.

• Factors that affect the application's capacity in terms of response time and performance are:

- Inefficient SQL statements

- Hardware equipment

- LAN/WAN

- Software version

- Underlying database system and database design

- Customization carried out for the application

- Adding end users from a previous release in a production environment

Scalability

• This aspect of testing examines the potential for scaling from the system architecture, design, development, and deployment viewpoints.

• The application should be tested for the ability to scale linearly: a balanced addition of computing resources (network, CPU, memory, disk, etc.) should result in a proportionate increase in capacity at the same performance level.

Reliability

• The purpose of this test is to ensure that the application under test performs in a consistent manner.

Burn-in

• In this testing, the system or subsystem under test is run at full and partial loads over an extended period of time to test for consistency.

Clustering

• For systems that use clustering support, one or more systems are removed from an operational installation to check for system recovery and to verify failover to the backup system.

• The application is tested for damage or compromise of data.

Test Case Designing

Test Case Design

# The design of tests is based on the following

• Test Strategy

• Test Planning

• Test Specification

• Test Procedure

Designing Unit Test cases

# To ensure that Unit testing is done correctly, follow these steps

• Step 1 : Make it run

• Step 2 : Positive Testing

• Step 3 : Negative Testing

• Step 4 : Special considerations

• Step 5 : Coverage Tests

• Step 6 : Coverage Completion

# Step 1 : Make it run

• The purpose of the first test case in any unit test specification should be to execute the unit under test in the simplest way possible.

• Suitable techniques

• Specification derived tests

• Equivalence partitioning

# Step 2 : Positive Testing

• Test cases should be designed to show that the unit under test does what it is supposed to do.

• Suitable techniques

• Specification derived tests

• Equivalence partitioning

• State-transition testing (using start, input, output, finish)
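As an illustration of equivalence partitioning in positive testing, the sketch below picks one representative input from each valid partition. The unit under test, `grade`, and its band limits are hypothetical and chosen only for this example.

```java
// Illustrative sketch; grade and its bands are assumptions for this example.
final class GradeClassifier {
    // Maps a score in 0..100 to a grade band.
    static String grade(int score) {
        if (score < 0 || score > 100) {
            throw new IllegalArgumentException("score out of range: " + score);
        }
        if (score < 40) {
            return "fail";
        }
        if (score < 75) {
            return "pass";
        }
        return "distinction";
    }

    // One representative input per valid partition; returns the number of failures.
    static int runPositiveTests() {
        int failures = 0;
        if (!grade(20).equals("fail")) failures++;         // partition 0..39
        if (!grade(55).equals("pass")) failures++;         // partition 40..74
        if (!grade(90).equals("distinction")) failures++;  // partition 75..100
        return failures;
    }
}
```

Any value inside a partition is expected to behave like its representative, which keeps the number of positive test cases small.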

# Step 3 : Negative Testing

• Existing test cases should be enhanced, and further test cases designed, to show that the software does not do anything that it is not specified to do.

• This means incorporating negative testing.

• Suitable techniques

• Error guessing

• Boundary value analysis

• Internal boundary value testing

• State-transition testing
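Boundary value analysis and error guessing can be sketched against a small input parser. The unit under test, `PercentageParser.parse`, is hypothetical; it accepts whole numbers from 0 to 100 and is assumed to reject everything else with an `IllegalArgumentException`.

```java
// Illustrative sketch; PercentageParser is a hypothetical unit under test.
final class PercentageParser {
    // Parses a whole-number percentage in the range 0..100.
    static int parse(String text) {
        if (text == null || text.trim().isEmpty()) {
            throw new IllegalArgumentException("empty input");
        }
        int value;
        try {
            value = Integer.parseInt(text.trim());
        } catch (NumberFormatException e) {
            throw new IllegalArgumentException("not a whole number: " + text, e);
        }
        if (value < 0 || value > 100) {
            throw new IllegalArgumentException("out of range: " + value);
        }
        return value;
    }

    // Returns true if parse rejects the input with IllegalArgumentException.
    static boolean rejects(String text) {
        try {
            parse(text);
            return false;
        } catch (IllegalArgumentException e) {
            return true;
        }
    }

    // Boundary values on both sides of each limit, plus guessed bad inputs.
    static int runNegativeTests() {
        int failures = 0;
        if (rejects("0")) failures++;      // lower boundary, valid
        if (rejects("100")) failures++;    // upper boundary, valid
        if (!rejects("-1")) failures++;    // just below the lower boundary
        if (!rejects("101")) failures++;   // just above the upper boundary
        if (!rejects("")) failures++;      // error guessing: empty input
        if (!rejects(null)) failures++;    // error guessing: missing input
        if (!rejects("abc")) failures++;   // error guessing: non-numeric text
        if (!rejects("12.5")) failures++;  // error guessing: fractional value
        return failures;
    }
}
```

The valid boundaries are included so that a test failure distinguishes an over-strict check from a missing one.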

# Step 4: Special Considerations

• Test cases should be designed to address issues such as performance, safety requirements, and security requirements.

• Particularly in the cases of safety and security, it should be possible to give such test cases special emphasis to facilitate security analysis, or safety analysis and certification.

• Suitable techniques

• Specification derived tests

# Step 5 : Coverage Tests

• The test coverage likely to be achieved by the designed test cases should be visualized.

• If found wanting, further test cases can be added to achieve specific test coverage objectives.

• Suitable techniques

• Branch testing

• Condition testing

• Data definition-use testing

• State transition testing

# Step 6 : Coverage Completion

• This step takes into consideration the organization's standards for the specification of a unit.

• Human errors are likely to have been made in the development of a test specification.

• There may be complex decision conditions, loops, and branches within the code for which coverage targets were not met on test execution.

• When coverage objectives are not achieved, analysis must be conducted to determine why.

Reasons (Coverage Completion)

• A coverage objective may not be achieved due to:

• Infeasible paths or conditions: provide justification for why the path or condition was not tested.

• Unreachable or redundant code: correct the code by deleting the offending part.

• Insufficient test cases: test cases should be refined and further test cases added.
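An infeasible path can be shown with two decisions whose conditions exclude each other. The sketch below is illustrative only; `adjust` and `pathOf` are hypothetical names. The code has four syntactic paths (neither branch, A only, B only, both), but no input can take both branches or neither, so a path coverage target of four would need a written justification and not more test cases.

```java
// Illustrative sketch; adjust and pathOf are assumptions for this example.
final class InfeasiblePaths {
    static int adjust(int n) {
        int result = n;
        if (n > 0) {
            result += 1;   // branch A
        }
        if (n <= 0) {
            result -= 1;   // branch B
        }
        return result;
    }

    // Records which branches an input would take: "A", "B", "AB" or "".
    static String pathOf(int n) {
        StringBuilder path = new StringBuilder();
        if (n > 0) {
            path.append('A');
        }
        if (n <= 0) {
            path.append('B');
        }
        return path.toString();
    }
}
```

Two test inputs, one positive and one non-positive, already give full statement and branch coverage here.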

Coverage Completion

• Suitable techniques

• Branch testing

• Condition testing

• Data definition-use testing

• State transition testing

Test Case Design Techniques

• The techniques for designing test cases can be grouped into two broad categories.

• Black box techniques

• White Box techniques

Thank you for your wonderful patience
